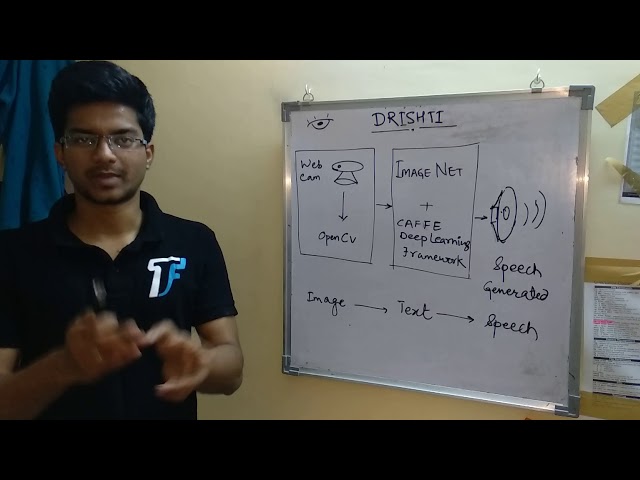

Our project, Drishti, focuses on aiding the visually impaired. Our wearable makes blind users aware of their surroundings and helps them with everyday activities such as reading a book, reading and making sense of sign boards, and recognizing currency notes.

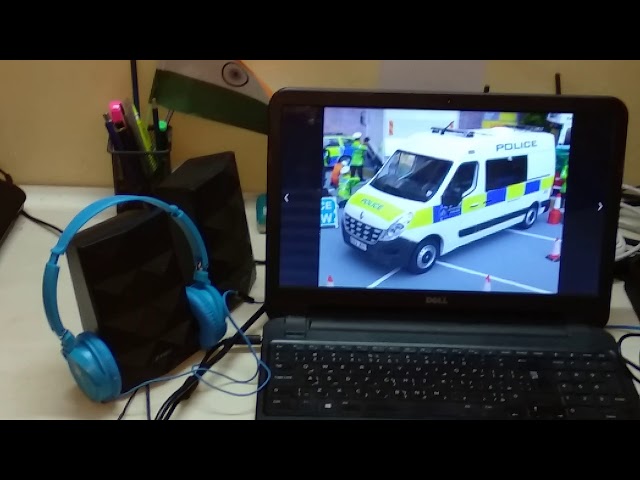

Our use cases demand near real-time processing of images captured by the camera. We use the ImageNet database in conjunction with a Caffe-powered deep learning model to generate labels by performing object detection on the images, and we leverage the processing power of the Nvidia Jetson for this purpose. The labels, generated as text, are then converted to speech using the Trans library for Linux. We have also added a feature that translates the text from English to Hindi for people who are not comfortable with English. To read text in the scene, we use the Tesseract character recognition engine for Linux to perform Optical Character Recognition (OCR) on the captured images. This helps us achieve our objective of helping the visually impaired make sense of sign boards, books and currency notes.
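The flow described above (label the image, read any text in it, optionally translate, then speak) could be wired together roughly as follows. This is a minimal sketch and not our actual code: it assumes the "Trans" tool is the `trans` command from translate-shell and that OCR is done through the `tesseract` command-line program. The names `tesseract_cmd`, `translate_cmd`, `run` and `describe_image` are hypothetical helpers introduced only for illustration, and the Caffe classifier is represented by a plain callable rather than a real model.

```python
import shutil
import subprocess
from typing import Callable, List, Optional


def tesseract_cmd(image_path: str, lang: str = "eng") -> List[str]:
    """Arguments asking the tesseract CLI to print recognized text to stdout."""
    return ["tesseract", image_path, "stdout", "-l", lang]


def translate_cmd(text: str, source: str = "en", target: str = "hi") -> List[str]:
    """Arguments for translate-shell in brief mode (assumed to be the 'Trans' tool)."""
    return ["trans", "-b", f"{source}:{target}", text]


def run(cmd: List[str]) -> str:
    """Run an external command and return its stdout with whitespace trimmed."""
    if shutil.which(cmd[0]) is None:
        raise RuntimeError(f"{cmd[0]} is not installed")
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout.strip()


def describe_image(
    image_path: str,
    classify: Callable[[str], str],
    ocr: Callable[[str], str],
    translate: Optional[Callable[[str], str]] = None,
) -> str:
    """Build the sentence that would be handed to the speech step.

    classify stands in for the Caffe model (hypothetical callable),
    ocr returns any text found in the image, and translate is optional.
    """
    parts = []
    label = classify(image_path).strip()
    if label:
        parts.append(f"Object: {label}")
    text = ocr(image_path).strip()
    if text:
        parts.append(f"Text: {text}")
    message = ". ".join(parts)
    if translate is not None and message:
        message = translate(message)
    return message
```

On a device with both tools installed, the OCR and translation steps could be passed in as `ocr=lambda p: run(tesseract_cmd(p))` and `translate=lambda t: run(translate_cmd(t))`. Keeping each stage as a callable lets the pipeline be tried out with stand-in functions before the camera, model and audio output are connected.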

Drishti is a wearable designed specifically for the visually impaired. With this project, we aim to bring convenience and a sense of awareness to their day-to-day activities.
