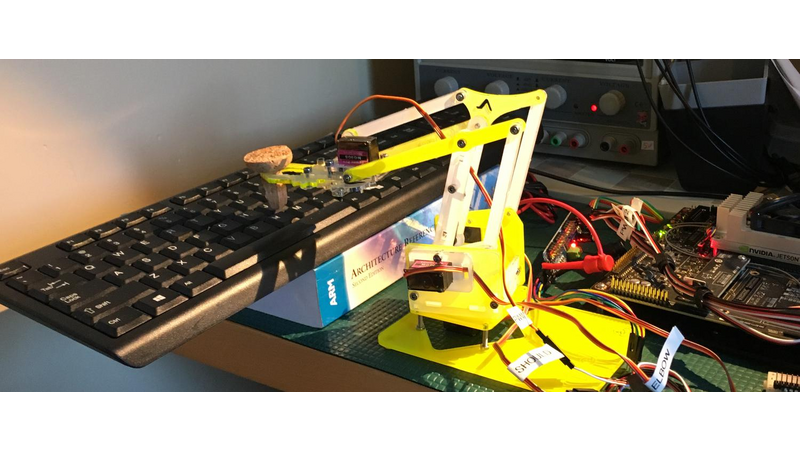

It starts with the Jetson TX2, still on its development board. The TX2 is connected via I2C to a servo controller, which connects to a current monitoring system. That, in turn, connects to a 4-degree-of-freedom (DOF) robotic arm. I know the TX2 should rightfully control a state-of-the-art robotic arm intended to do something wildly complicated, like welding repairs in an underwater city or making perfect sourdough bread. However, the MeArm cost $50, and I want to show the advantage of incredible brains over inexpensive brawn.
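
To give a feel for what driving hobby servos through an I2C servo controller involves, here is a minimal sketch of the angle-to-PWM conversion such a controller typically needs. Everything specific in it is an assumption, not a detail of this build: a 12-bit PWM counter, a 50 Hz servo frame, and a 1000 to 2000 microsecond pulse range. The function name `angle_to_ticks` is hypothetical.

```python
# Assumed values for a generic 12-bit, 50 Hz PWM servo controller.
# The actual controller and servo limits in this project may differ.
PWM_FREQ_HZ = 50        # standard hobby-servo frame rate (assumed)
RESOLUTION = 4096       # 12-bit counter (assumed)
MIN_PULSE_US = 1000     # pulse width at 0 degrees (assumed)
MAX_PULSE_US = 2000     # pulse width at 180 degrees (assumed)


def angle_to_ticks(angle_deg):
    """Convert a servo angle in degrees to a PWM 'off' tick count."""
    # Clamp so a bad command cannot drive the servo past its range.
    angle = max(0.0, min(180.0, float(angle_deg)))
    # Linearly interpolate the pulse width between the two end points.
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0
    # One PWM frame lasts 1/50 s = 20000 microseconds.
    period_us = 1_000_000 / PWM_FREQ_HZ
    return round(pulse_us * RESOLUTION / period_us)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, angle_to_ticks(angle))
```

On real hardware the resulting tick count would be written to the controller's registers over I2C; that part depends on the specific board, so it is left out here.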

At the start of this contest, the robot didn’t type, but that was my goal for the project: a typing robot. Typing is a demonstration of fine motor skill and precise actuation. By making the system flexible and vision-based, I can avoid creating a brittle, unusable system. My USB webcam oversees the system.

The software is written in Python, using OpenCV for computer vision and CMU's PocketSphinx for speech recognition. The code is all open source, freely licensed, and on GitHub. While I am making a robot arm that types what you say to it, most of my goals for this project are about learning and teaching.
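
As a rough illustration of how the pieces could fit together, the sketch below turns a recognized phrase into an ordered list of key targets. It is not the project's actual code: `plan_keystrokes` and the `key_positions` dictionary are hypothetical, and the pixel coordinates are made up. The assumption is that the speech step yields a plain string and the vision step yields a mapping from key names to image coordinates.

```python
def plan_keystrokes(text, key_positions):
    """Return a list of (key, (x, y)) targets for the arm to press.

    text          -- the phrase from the speech recognizer (assumed a str)
    key_positions -- hypothetical dict of key name -> (x, y) pixel location,
                     as a vision step might report it
    """
    plan = []
    for ch in text.lower():
        # Keyboards label the space bar by name, not by character.
        key = "space" if ch == " " else ch
        # Skip anything the vision step did not locate on the keyboard.
        if key in key_positions:
            plan.append((key, key_positions[key]))
    return plan


if __name__ == "__main__":
    # Made-up coordinates purely for demonstration.
    positions = {"h": (310, 220), "i": (390, 180), "space": (300, 300)}
    for key, (x, y) in plan_keystrokes("Hi !", positions):
        print(key, x, y)
```

Looking positions up at plan time, rather than hard-coding them, is what lets a vision-based system cope with a keyboard that has moved.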

Note: I made a slide deck that describes my project with pictures and words. I recommend looking at that for the hardware and software diagrams and more detail.
