Atlas is an AI-powered drone that can land fully autonomously while avoiding obstructions on the ground. At its core is the NVIDIA Jetson TX1 module. The Jetson is the only compute module capable of running the embedded artificial intelligence routines while also meeting the tight size and power constraints of the aircraft.

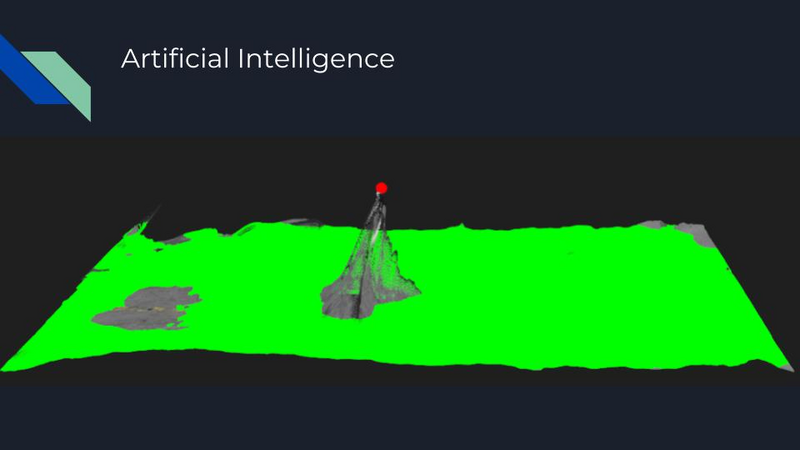

To illustrate how the algorithm works, we can walk through what happens when the aircraft is commanded to return home and land using the software developed for this project. First, Atlas flies to a safe position hovering above the landing location. Then, as it descends, it scans the area for obstacles between it and the ground. If an obstruction is found, the aircraft halts its descent and moves to avoid it. Once clear, it resumes its descent until it reaches a critical height. Finally, it deploys the landing gear and commits to the landing.
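The landing sequence described above can be read as a small state machine. The following is a minimal sketch under stated assumptions, not the project's actual implementation: the phase names, the `next_phase` function, and the `CRITICAL_HEIGHT_M` value are all hypothetical, since the write-up does not give the critical height or the internal structure of the software.

```python
from enum import Enum, auto


class Phase(Enum):
    HOVER = auto()    # holding a safe position above the landing location
    DESCEND = auto()  # descending while scanning for obstacles
    AVOID = auto()    # descent halted, moving clear of an obstruction
    COMMIT = auto()   # landing gear deployed, committed to touchdown


# Hypothetical value: the source does not state the critical height.
CRITICAL_HEIGHT_M = 2.0


def next_phase(phase, height_m, obstacle_below):
    """Return the next phase given the current height and scan result."""
    if phase is Phase.COMMIT:
        return Phase.COMMIT      # once committed, the landing is not aborted
    if phase is Phase.HOVER:
        return Phase.DESCEND     # safe position reached, begin the descent
    if obstacle_below:
        return Phase.AVOID       # halt the descent and move clear
    if height_m <= CRITICAL_HEIGHT_M:
        return Phase.COMMIT      # path is clear and the ground is close
    return Phase.DESCEND         # path is clear, keep (or resume) descending


# Example: an obstruction is seen at 10 m and has cleared by the next scan.
phase = Phase.HOVER
for height_m, obstacle_below in [(30.0, False), (10.0, True), (10.0, False), (1.5, False)]:
    phase = next_phase(phase, height_m, obstacle_below)
```

Keeping the transition logic in a pure function like this makes each step easy to replay against recorded flight data, which is one reason a sketch of this shape is convenient for testing.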

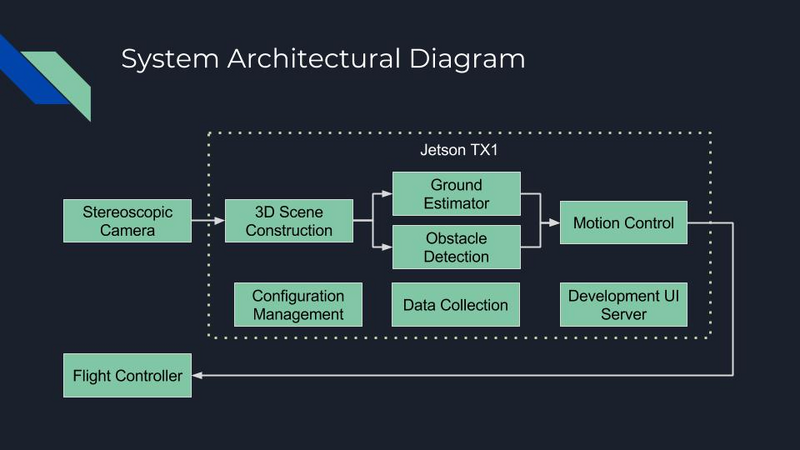

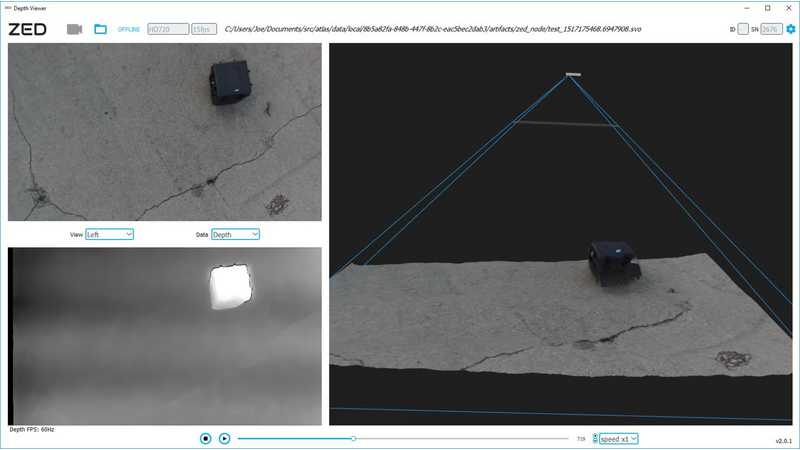

Architecturally, several pieces come together to make the system function. In the hardware domain, we start with a stereoscopic camera, which provides raw images. These images are received by the Jetson TX1 module and used to construct a three-dimensional representation of the world around the aircraft. This model is then fed to the ground estimator and the obstacle detection modules. The ground estimator determines which parts of the model constitute areas on which the aircraft may land. The obstacle detection module searches the model for objects that could obstruct the aircraft's flight path.
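To make the split between ground estimation and obstacle detection concrete, here is a deliberately simple sketch. The write-up does not describe how either module works, so this stands in with an assumed technique: the 3D model is taken to be a list of `(x, y, z)` points with `z` pointing up, the ground is assumed to be level, and its height is estimated as the median `z`. The function names and the `tolerance_m` parameter are hypothetical.

```python
from statistics import median


def estimate_ground_height(points):
    """Median z of the cloud; robust as long as most points lie on the ground."""
    return median(z for _, _, z in points)


def split_ground_and_obstacles(points, tolerance_m=0.15):
    """Label points as landable ground or as obstacles rising above it.

    Points more than tolerance_m below the ground estimate (holes, ditches)
    are placed in neither list in this sketch.
    """
    ground_z = estimate_ground_height(points)
    ground = [p for p in points if abs(p[2] - ground_z) <= tolerance_m]
    obstacles = [p for p in points if p[2] - ground_z > tolerance_m]
    return ground_z, ground, obstacles


# Example: a flat 5 x 5 patch of ground with two raised points on it.
cloud = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud += [(2.0, 2.0, 1.0), (2.0, 3.0, 1.2)]
ground_z, ground, obstacles = split_ground_and_obstacles(cloud)
```

A real estimator would need to handle sloped terrain and noisy stereo depth, for example by fitting a plane robustly instead of assuming a single height; the median is used here only because it keeps the example short and tolerant of a minority of obstacle points.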

These estimates of the world’s state are then received by the motion control module, which determines whether to descend, move the aircraft, or commit to the landing. These decisions are sent as commands to the flight controller, which, in combination with its own built-in sensors, executes them through its interface to the aircraft’s motors.
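One way to picture the hand-off from motion control to the flight controller is as a function that turns the current estimates into a velocity command. This is a sketch under assumptions, not the project's interface: the `VelocityCommand` type, the `choose_command` function, the axis convention, and all default speeds and heights are hypothetical, and the actual command format used by the flight controller is not described in the source.

```python
import math
from dataclasses import dataclass


@dataclass
class VelocityCommand:
    vx: float                  # m/s along the aircraft's x axis
    vy: float                  # m/s along the aircraft's y axis
    vz: float                  # m/s, positive up
    deploy_gear: bool = False


def choose_command(height_m, obstacles, critical_height_m=2.0,
                   descent_rate=0.5, sidestep_speed=1.0):
    """Pick descend, move, or commit from the current world estimate.

    obstacles: list of (x, y) offsets in metres from the aircraft to each
    obstruction in its descent path; empty when the path is clear.
    """
    if obstacles:
        # Move: hold altitude and fly away from the centroid of the obstructions.
        cx = sum(x for x, _ in obstacles) / len(obstacles)
        cy = sum(y for _, y in obstacles) / len(obstacles)
        norm = math.hypot(cx, cy)
        if norm == 0.0:
            # Obstruction directly below: any horizontal direction will do.
            return VelocityCommand(sidestep_speed, 0.0, 0.0)
        return VelocityCommand(-sidestep_speed * cx / norm,
                               -sidestep_speed * cy / norm, 0.0)
    if height_m <= critical_height_m:
        # Commit: deploy the gear and continue down to touchdown.
        return VelocityCommand(0.0, 0.0, -descent_rate, deploy_gear=True)
    # Descend: path is clear and the aircraft is still above the critical height.
    return VelocityCommand(0.0, 0.0, -descent_rate)
```

For example, `choose_command(10.0, [(2.0, 0.0)])` holds altitude and moves at `sidestep_speed` in the negative x direction, away from an obstruction two metres ahead along x.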

Beyond this critical chain are additional modules for configuration management and data collection, along with a development UI server that provides the interface operators use during software development.

The functionality of the system has been verified over the course of more than a dozen flight test days. The 29 gigabytes of data collected have validated the accuracy of the ground plane estimator and obstacle detection modules. The TX1 has also proven capable of updating these estimates at 8 Hz, which has been more than sufficient to drive the motion controller.

The problem this project addresses is simple. For every drone being operated today, there is a human pilot watching over it. The pilot's job is to ensure the safety of the flight: preventing the aircraft from flying into structures or other aircraft, and ensuring a safe landing at the end. This demands the pilot's constant attention and precludes them from operating multiple aircraft at the same time. If a company wants to survey a large area or conduct a long mission, it simply has to keep adding pilots until there are enough to cover all the aircraft. This limits both the economic viability and the scope of missions.

The technology presented here is the first step towards drones that can intelligently interact with the world around them. Goldman Sachs forecast that the global drone industry would represent a $100 billion opportunity by 2020. This technology can make those drones safer and more cost-efficient to employ. It can enable them to go places that are unsafe for humans and unlock new capabilities such as swarm technology. While this project is only the first part of that vision, it has laid the groundwork for many innovations to come.
