Recent natural disasters have once again illustrated the need for better means of searching for survivors, performing damage assessment, and coordinating recovery efforts. Unmanned aerial vehicles (UAVs) can be quickly deployed onsite to provide an eye in the sky to save lives and help rebuild, but it can be difficult for an operator to manually inspect the video coming in from a UAV. It's even more challenging to turn these aerial views into a damage map or other representation that can be passed to rescuers, aid organizations, or insurance companies.

The ability to perform on-site or even on-sensor object detection within these video feeds would allow for immediate identification of areas of interest. Focusing on damage assessment, buildings could be identified, located in the sensor view, and classified based on the sustained damage level. Combined with knowledge of the sensor’s position and camera angle, the location of damaged structures could be automatically georeferenced. In a single flyover, a detailed assessment could be generated for use by recovery efforts or insurance companies.
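As a rough illustration of how a detection might be georeferenced from the sensor's position and camera angle, the sketch below projects one pixel onto the ground with an ideal pinhole camera model. Everything in it is an assumption for illustration, not the method used by SmartCam3D: flat terrain, zero camera roll, no lens distortion, a local east/north frame in meters, and the hypothetical names `CameraPose` and `pixel_to_ground`.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CameraPose:
    """Hypothetical camera pose in a local east/north/up frame (meters)."""
    east: float
    north: float
    altitude: float      # height above ground, assuming flat terrain
    heading_deg: float   # direction of the optical axis, clockwise from north
    pitch_deg: float     # depression below the horizon (90 = straight down); roll assumed 0


def pixel_to_ground(u: float, v: float, width: int, height: int,
                    hfov_deg: float, pose: CameraPose) -> Optional[Tuple[float, float]]:
    """Intersect the ray through pixel (u, v) with a flat ground plane.

    Returns (east, north) in meters, or None if the ray never reaches the ground.
    """
    # Ideal pinhole camera: focal length in pixels from the horizontal field of view.
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Ray in camera coordinates: x to the right, y down the image, z along the optical axis.
    x, y, z = u - width / 2, v - height / 2, f

    p = math.radians(pose.pitch_deg)
    # Rotate the ray into a level frame (forward along heading, right, up) by the pitch.
    d_fwd = z * math.cos(p) - y * math.sin(p)
    d_right = x
    d_up = -y * math.cos(p) - z * math.sin(p)
    if d_up >= 0:
        return None  # ray points at or above the horizon

    # Scale the ray so that it descends exactly `altitude` meters.
    t = pose.altitude / -d_up
    fwd, right = t * d_fwd, t * d_right

    # Rotate the forward/right offsets into east/north using the heading.
    h = math.radians(pose.heading_deg)
    east = pose.east + fwd * math.sin(h) + right * math.cos(h)
    north = pose.north + fwd * math.cos(h) - right * math.sin(h)
    return east, north
```

For a bounding box, the bottom-center pixel is a reasonable choice to project, since that is roughly where a structure meets the ground. A fielded system would also have to account for real terrain, lens distortion, camera roll, and telemetry error.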

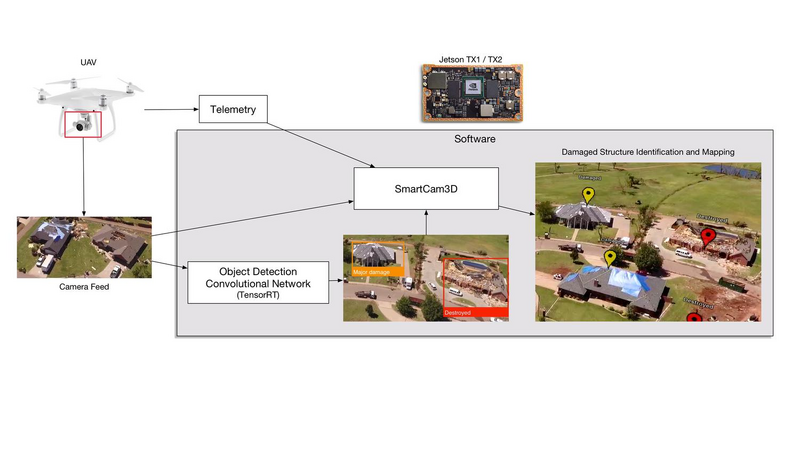

Perceptual Labs (a member of NVIDIA's Inception program) has been developing convolutional neural networks and supporting software for realtime object detection on mobile and embedded hardware. We've partnered with Rapid Imaging Technologies, a pioneer in augmented reality technologies for UAVs, to apply this object detection to airborne damage assessment. Together, we have built a software package that performs realtime detection of storm-damaged buildings, analyzes damage levels, marks their locations, and displays that information as part of an overlaid augmented reality video stream. All of this runs on a Jetson TX1 or TX2 and processes live video from the UAV.

Onsite, realtime processing of UAV video is critically important for relief and recovery efforts, because network connections and bandwidth in a disaster area are often spotty or nonexistent. The Jetson TX1 / TX2 is rapidly being adopted as standard computing hardware on UAVs as well as in embedded analysis systems on the ground. Software running entirely on a TX1 / TX2, without the need for network connections, would greatly aid disaster relief and recovery efforts.

As a proof-of-principle demonstration of this technology, a UAV flyover was performed in Elk City, OK, in mid-2017 in partnership with Embry-Riddle Aeronautical University, in the aftermath of an EF2 tornado. Images and video of intact, damaged, and destroyed buildings were captured at various camera angles. A training dataset was built from hand-labeled bounding boxes identifying structures that were damaged (visible external damage, but without the interior exposed to the elements) or destroyed (open to the air or completely leveled). Further datasets incorporating the Federal Emergency Management Agency’s (FEMA) four damage-assessment categories (Affected, Minor, Major, and Destroyed) are in the works.

A custom convolutional neural network was trained on this dataset using NVIDIA's DIGITS software and the Caffe framework. The network used the same inputs and outputs as NVIDIA's DetectNet architecture, but a completely different internal structure, optimized for performance while maintaining accuracy. The network was then deployed in custom iOS software to test its performance on mobile hardware, and finally incorporated into software running on the Jetson TX1 / TX2.

The Jetson-based software uses NVIDIA's TensorRT and can perform frame-by-frame object detection at over 30 frames per second on live or prerecorded video. In its simplest configuration, it overlays bounding boxes for damaged and destroyed structures, determined on a per-frame basis. In a more useful mode, these detections are fed into Rapid Imaging's SmartCam3D software, also running on the TX1 / TX2, which places them in the real world based on onboard UAV telemetry. Confidence in a detection is built up by detecting the same structure multiple times from multiple angles.

The video that accompanies this application demonstrates the live object detection running natively on Jetson TX1 / TX2, Rapid Imaging's SmartCam3D augmented reality overlays working on the same platform, and the synthesis of object detection and augmented reality overlays. We believe that onsite or on-sensor realtime damage assessment and feedback to operators can tremendously aid disaster relief efforts and save time and money for insurance agencies helping people get back on their feet.

We are jointly developing this software product to serve these needs and have demonstrated its function with the proof-of-principle presented here.

About the team:

Perceptual Labs has grown out of work by Dr. Brad Larson in the field of machine vision on mobile devices. His open source GPUImage framework is in use by thousands of iOS applications to perform GPU-accelerated image and video processing. The lessons learned from optimizing GPU-based operations for video processing in that project have fed directly into Perceptual Labs' work on mobile and embedded machine vision using convolutional networks and other techniques. Perceptual Labs was recently selected to be part of the NVIDIA Inception startup program.

Rapid Imaging has grown out of work by augmented reality pioneer Mike Abernathy, who developed one of the first successful applications of augmented reality in 1993 to track space debris. Since then, Rapid Imaging has continued to develop augmented reality and synthetic vision solutions designed to bring geographic context to live video. SmartCam3D, the company’s core product, was first used by NASA as the primary flight display for the X-38 Crew Return Vehicle. Today, it is fielded in all US Army UAV Systems, including the Shadow and Gray Eagle Ground Control Stations. In addition, the technology has been ported to small UAS via an iOS application and is applicable to any camera, airborne or otherwise, with a known location (either a fixed latitude/longitude or dynamic positioning information from a complementary GPS system). Recent deployments of the technology have included disaster response and emergency management efforts, such as small UAS overflights of flooded areas in the Midwest.
