Every year, farmers struggle to minimize crop damage done by disease. In 2016 alone, it's estimated that 817 million bushels of corn were lost to disease. Early identification and treatment of these diseases could be a tremendous help to farmers, but it's impractical to have trained specialists walking every field. What if these diseases and other problems (such as malnutrition, weeds, or insect damage) could be instantly and accurately diagnosed by handheld devices, hardware on agricultural equipment, or autonomous robots?

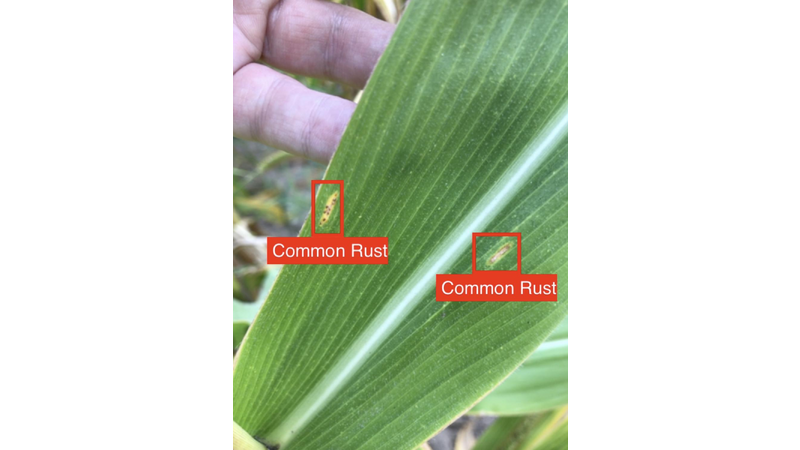

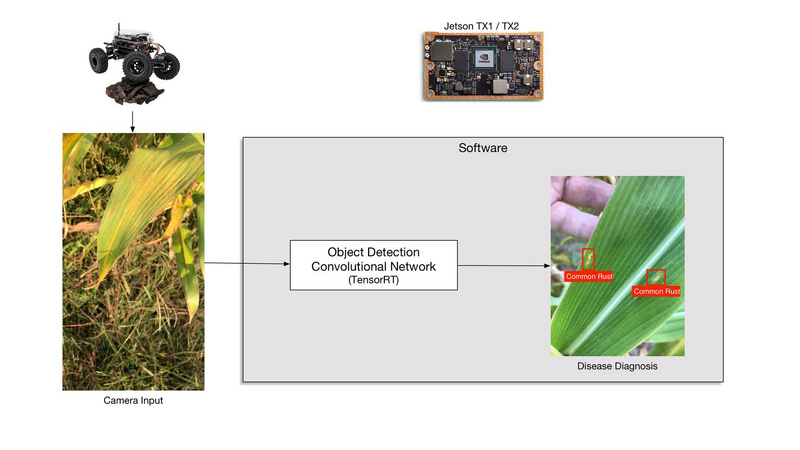

Agricultural Intelligence has set out to solve this problem with software that performs realtime diagnosis of crop health on mobile and embedded hardware. Our software runs on NVIDIA Jetson TX1 and TX2 computers and can perform realtime detection, classification, and localization of disease, down to individual lesions on the leaves of common crops. This object detection runs at up to 60 frames per second on live camera video, entirely on the device. For the diseases we currently detect best, diagnosis accuracy exceeds 80% with a false positive rate below 1.5%.
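The figures above do not specify how "diagnosis accuracy" and "false positive rate" were computed. As a hedged sketch, assuming both come from per-sample true/false positive and negative counts for a single disease class, the standard definitions look like this. The class and function names are hypothetical, and because "diagnosis accuracy" could mean either overall accuracy or detection rate (recall), both are shown.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Hypothetical per-sample outcome counts for one disease class."""
    true_positive: int   # diseased, and flagged as diseased
    false_positive: int  # healthy, but flagged as diseased
    true_negative: int   # healthy, and not flagged
    false_negative: int  # diseased, but missed


def accuracy(c: ConfusionCounts) -> float:
    # Fraction of all samples that were classified correctly.
    total = c.true_positive + c.false_positive + c.true_negative + c.false_negative
    return (c.true_positive + c.true_negative) / total


def recall(c: ConfusionCounts) -> float:
    # Fraction of truly diseased samples that were detected.
    return c.true_positive / (c.true_positive + c.false_negative)


def false_positive_rate(c: ConfusionCounts) -> float:
    # Fraction of truly healthy samples that were wrongly flagged as diseased.
    return c.false_positive / (c.false_positive + c.true_negative)
```

With made-up counts of 80 true positives, 20 false negatives, 1 false positive and 99 true negatives, recall is 0.80 and the false positive rate is 0.01. The false positive rate is measured only against healthy samples, which is why it can stay low while the detection rate is more modest.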

Agricultural Intelligence is a joint venture between Perceptual Labs (a member of NVIDIA's Inception program) and Ag AI. Perceptual Labs brings expertise in machine vision on mobile and embedded devices, and the founders of Ag AI bring significant agricultural experience and expertise. Together, we have worked to apply recent advances in convolutional networks for object recognition to the challenges of agriculture. Perceptual Labs has also submitted a separate entry to the Jetson Developer Challenge, in partnership with Rapid Imaging Technologies, for on-UAV damage assessment.

In 2017, Agricultural Intelligence started deploying an application called Pocket Agronomist into field testing with farmers and agronomists. Pocket Agronomist is an iOS application that performs live disease diagnosis and localization using a convolutional network that operates against the live video from the device's camera. When a disease is diagnosed, a farmer can mark its location in the field, transmit this to experts, and be presented with encyclopedic knowledge about the disease and any mitigation steps.

This application uses a convolutional network trained against a massive dataset of crop disease images taken in the field under varying conditions. These images have disease regions labeled within them, in order to train a convolutional network for object detection and not just single-image classification. This allows for a more precise diagnosis and for multiple diseases to be diagnosed at the same time on a single leaf. These images were acquired by a distributed network of farmers and agronomists throughout the 2017 growing season and from repositories of images they had collected in previous seasons.

All training of these networks was done using NVIDIA DIGITS and the Caffe framework. The development process of this application is described in detail here.

A handheld application for diagnosing disease is extremely useful, but it is still tied to a human operator. There are indications that agriculture is moving toward greater autonomy in the robotic monitoring and harvesting of fields. In its report "Agricultural Robots and Drones 2018-2038: Technologies, Markets, Players", IDTechEx projects that fleets of small, autonomous agricultural robots could represent a $900M market by 2028 and a $2.5Bn market by 2038. These robots will need the ability to detect disease, weeds, and other yield-reducing factors in the field.

Additionally, there is a significant opportunity to retrofit monitoring capabilities onto existing agricultural hardware. Vehicles that are already moving down the rows in a field for other purposes could have monitoring cameras and embedded computing hardware mounted on them to detect and report disease and other problems.

Agronomic knowledge, particularly as it pertains to disease diagnostics and the visual identification of lesions, is developed over years of education and experience. Fully trained agronomists, who often also act as salespeople for their employer, are busy during the growing months and struggle to meet all the demands of disease diagnostics. Having a trained agronomist on-site to constantly monitor every grower’s field is impossible, particularly in rural areas where a single agronomist may cover many customers over a large geographic area. Deploying a trained network to a handheld or, better yet, an autonomous device to perform this monitoring is therefore a significant advantage.

The Jetson TX1 / TX2 provide an ideal platform for the embedded computing needed for autonomous agricultural robots and vision-based retrofit kits for existing hardware. It's for this reason that we've taken the trained networks and software developed for Pocket Agronomist and brought them to the Jetson TX1 / TX2.

We are using software built around TensorRT on the Jetson hardware to run optimized versions of trained object detection convolutional networks on live camera video, still images, or prerecorded movies. These networks have inputs and outputs similar to those of NVIDIA's DetectNet object detection network template, but their internals have been completely redesigned for realtime performance on embedded hardware.
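DetectNet, as used in DIGITS, emits two grids at a coarser resolution than the input image: a coverage map giving per-cell object confidence, and a four-channel map of box corner coordinates. The following is a minimal pure-Python sketch of turning such outputs into lesion boxes, assuming a stride of 16 pixels, a single class, and box values stored as pixel offsets from each cell's top-left corner. DIGITS's reference clustering step groups candidate rectangles with OpenCV's `groupRectangles`; this sketch substitutes plain non-maximum suppression instead. All function names are hypothetical, not the authors' code.

```python
from typing import List, Tuple

# (x1, y1, x2, y2, score) in input-image pixels.
Box = Tuple[float, float, float, float, float]


def decode_grid(coverage: List[List[float]],
                bboxes: List[List[List[float]]],
                stride: int = 16,
                threshold: float = 0.5) -> List[Box]:
    """Convert DetectNet-style grids into candidate boxes (assumed layout).

    coverage[gy][gx]  : confidence that grid cell (gx, gy) overlaps an object.
    bboxes[k][gy][gx] : k = 0..3 gives x1, y1, x2, y2 as pixel offsets from
                        the cell's top-left corner.
    """
    candidates: List[Box] = []
    for gy, row in enumerate(coverage):
        for gx, score in enumerate(row):
            if score < threshold:
                continue  # cell is not confident enough to propose a box
            ox, oy = gx * stride, gy * stride  # cell origin in image pixels
            candidates.append((bboxes[0][gy][gx] + ox,
                               bboxes[1][gy][gx] + oy,
                               bboxes[2][gy][gx] + ox,
                               bboxes[3][gy][gx] + oy,
                               score))
    return candidates


def iou(a: Box, b: Box) -> float:
    # Intersection-over-union of two axis-aligned boxes.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_threshold: float = 0.5) -> List[Box]:
    # Greedy non-maximum suppression: keep the highest-scoring box and drop
    # any later box that overlaps an already kept box too strongly.
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(iou(box, k) < iou_threshold for k in kept):
            kept.append(box)
    return kept
```

Because neighbouring cells that cover the same lesion each propose a box, suppression is what reduces those overlapping proposals to one detection per lesion.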

The software we have developed can perform per-frame disease diagnosis on incoming video at over 30 frames per second, fast enough to provide low-latency responses in the field as part of a robot's vision system. The accuracy and viability of this disease detection has already been demonstrated through field trials via the Pocket Agronomist application, and the newly developed ability to run on Jetson TX1 / TX2 provides a proof-of-principle demonstration of its ability to act as part of the vision system for autonomous agricultural robots.
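At 30 frames per second, each frame has a budget of roughly 33 ms for capture, inference, and post-processing. The following hedged sketch checks a detector against that budget. Here `detect` stands in for a hypothetical per-frame inference call, such as a wrapper around a TensorRT engine, and is not part of any real API.

```python
import time
from typing import Any, Callable, Iterable


def frame_budget_ms(target_fps: float) -> float:
    # Time available per frame if every frame must be processed at target_fps.
    return 1000.0 / target_fps


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any]) -> float:
    """Run the hypothetical `detect` on every frame; return sustained FPS."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        detect(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")
```

Measuring sustained throughput over many frames, rather than timing a single call, smooths out warm-up costs and occasional stalls that a single measurement would miss.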

In recent conversations with farmers, cooperatives, independent retailers, and government agencies, we’ve heard a consistent message about field monitoring, whether by human, drone, airplane, satellite, or otherwise: these entities would welcome additional monitoring of their fields, but they question the cost and reliability of the information they are provided. Many monitoring services currently available to growers rely on remote sensing technologies or “boots on the ground” crop scouting interns. Each has its pitfalls in terms of cost and reliability.

If a low-cost solution existed that could leverage the knowledge and experience of a trained agronomist at a price manageable for a grower, many more disease outbreaks would be found and more yield would be saved. By deploying trained convolutional networks to embedded devices such as the NVIDIA Jetson TX1/TX2, we believe we can begin to tackle this challenge.
