In June 2016, NVIDIA released JetPack 2.2 for the ARMv8-powered Jetson TX1. The release of a unified 64-bit kernel, userspace, and CUDA 7.5 libraries significantly increased performance per watt over the previous JetPack, which was limited to 32 bits, and inspired us to investigate deep learning on the Jetson.

In December 2017, NVIDIA released JetPack 3.2 DP for the Jetson TX2, which significantly improved the performance of TensorRT and cuDNN. Our team has incorporated updates from the ARM Performance Libraries and the JetsonHacks TensorFlow HOWTOs to maximize the performance of serial TensorFlow. We then parallelized it with MPI and scaled up.

Demonstrations on production codes and traditional benchmarks have shown the Jetson TX2 (JTX2) to be on an aggressive path that will put us back on a Moore’s Law trajectory as we approach the exascale era. The interplay of ARM instructions and UMA-aware CUDA 9 code has drastically increased the performance of embedded systems relative to their discrete counterparts.

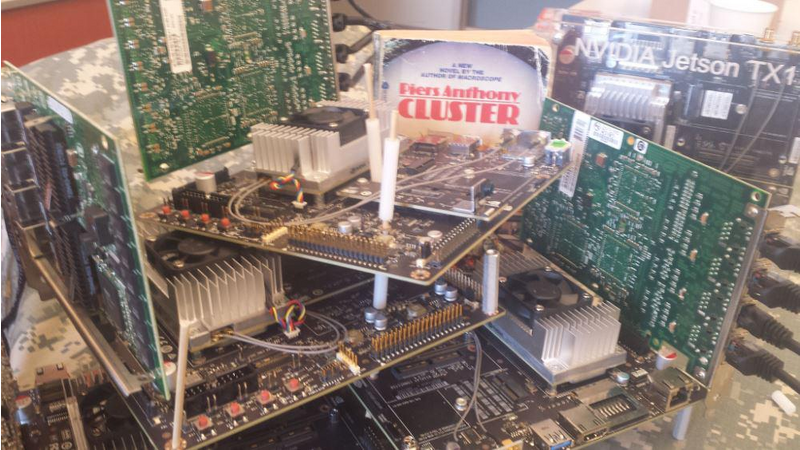

NVIDIA had already made significant inroads into supercomputing, with Maxwell and Kepler (and now Pascal and Volta) cores as the accelerators of choice. Combining the GPU and CPU on the same silicon die has made the NVIDIA Jetson platform competitive in price, performance, energy efficiency, and physical footprint. Indeed, as we port codes from servers with PCI buses to embedded systems that use shared memory, we gain in both power and performance, which gives us better real-time behavior and room for bigger problem spaces.

We hope to experiment soon with Volta-class cores in an embedded package with NVDLA. An architecture built around Volta should be competitive with Google's TPU clusters. Until then, we will develop daughterboards and cluster improvements in our Blamange Project to steadily improve machine learning speeds. We will likely try to demo this at the next Supercomputing Conference, ideally at the student cluster contest.

(N.B. TensorFlow was a competition code at the ISC17 SCC. Ours was the only team using Jetsons to work on it.)
