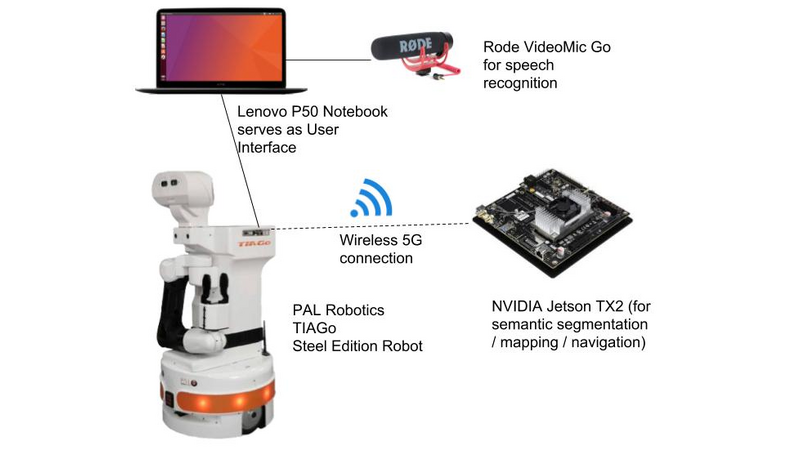

We attached an NVIDIA Jetson TX2 to a PAL Robotics TIAGo robot, where the Jetson development board extends the robot as a dedicated vision module. We developed a custom approach for segmenting objects in image space, inspired by current segmentation networks. Using the robot's RGB-D camera, we project the resulting segmentation into a local robot coordinate system so that the segmented objects can be manipulated.

We integrated the semantic segmentation into a practical use case, for which we propose a state-dependent segmentation method. The robot navigates to a fridge and searches for its handle to open it. Once the fridge is open, the robot takes another look to segment the beer bottles and cans inside and grasps them. Finally, the robot closes the fridge and delivers the beer.

Currently, the Jetson TX2 is used mainly to run our custom semantic segmentation network. However, other custom robot modules, such as navigation, mapping and speech recognition, can be executed on the Jetson as well.
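Projecting a 2D segmentation into the robot's coordinate system with an RGB-D camera is commonly done by back-projecting each segmented pixel through the pinhole camera model and then applying the camera-to-robot transform. The sketch below illustrates that general technique under stated assumptions; it is not taken from the homer_home_net package. It assumes a depth image in meters registered to the RGB image, pinhole intrinsics `fx`, `fy`, `cx`, `cy` (in ROS these come from the camera's `CameraInfo` message), and a 4x4 homogeneous matrix `T_base_cam` from the camera frame to the robot base frame (in ROS typically looked up via tf). The function names are hypothetical.

```python
import numpy as np


def mask_to_points(mask, depth, fx, fy, cx, cy):
    """Back-project the pixels of a binary segmentation mask into 3D
    camera coordinates using the pinhole camera model.

    mask  -- (H, W) boolean array, True where the object was segmented
    depth -- (H, W) float array of depth in meters, registered to the RGB
             image (assumption: 0 or NaN marks a missing measurement)
    """
    v, u = np.nonzero(mask)              # pixel rows (v) and columns (u)
    z = depth[v, u]
    valid = np.isfinite(z) & (z > 0)     # drop pixels without a depth reading
    u, v, z = u[valid], v[valid], z[valid]
    x = (u - cx) * z / fx                # inverse of u = fx * x / z + cx
    y = (v - cy) * z / fy                # inverse of v = fy * y / z + cy
    return np.stack([x, y, z], axis=1)   # (N, 3) points in the camera frame


def to_robot_frame(points_cam, T_base_cam):
    """Transform (N, 3) camera-frame points into the robot frame using a
    4x4 homogeneous transform (assumed to be provided, e.g. by tf)."""
    homo = np.hstack([points_cam, np.ones((len(points_cam), 1))])
    return (T_base_cam @ homo.T).T[:, :3]
```

A grasp target could then be approximated, for example, by the centroid `to_robot_frame(points, T).mean(axis=0)` of a segmented bottle; how the original system selects grasp poses is not described here.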
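One plausible way to read "state-dependent segmentation" is that the task state decides which object classes the robot looks for: the handle while opening the fridge, and bottles and cans once it is open. The following sketch shows only that idea as a simple state machine. The state names, class labels and the `(class_name, score)` detection format are hypothetical and not the package's actual interface.

```python
from enum import Enum, auto


class TaskState(Enum):
    """Hypothetical states of the fetch-a-beer task described above."""
    NAVIGATE_TO_FRIDGE = auto()
    OPEN_FRIDGE = auto()
    GRASP_DRINK = auto()
    CLOSE_FRIDGE = auto()
    DELIVER = auto()
    DONE = auto()


# Hypothetical mapping: segmentation classes that matter in each state.
# States not listed here do not use the segmentation at all in this sketch.
RELEVANT_CLASSES = {
    TaskState.OPEN_FRIDGE: {"handle"},
    TaskState.GRASP_DRINK: {"bottle", "can"},
}

# Linear task sequence: navigate, open, grasp, close, deliver.
NEXT_STATE = {
    TaskState.NAVIGATE_TO_FRIDGE: TaskState.OPEN_FRIDGE,
    TaskState.OPEN_FRIDGE: TaskState.GRASP_DRINK,
    TaskState.GRASP_DRINK: TaskState.CLOSE_FRIDGE,
    TaskState.CLOSE_FRIDGE: TaskState.DELIVER,
    TaskState.DELIVER: TaskState.DONE,
}


def filter_detections(state, detections):
    """Keep only the (class_name, score) detections relevant to `state`."""
    wanted = RELEVANT_CLASSES.get(state, set())
    return [d for d in detections if d[0] in wanted]


def advance(state):
    """Return the next task state; DONE is terminal."""
    return NEXT_STATE.get(state, TaskState.DONE)
```

Restricting the classes per state keeps, for instance, a bottle label from being treated as a handle candidate while the fridge is still closed; whether the original method filters outputs like this or switches models is not stated in the description.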

The resulting ROS object segmentation package, including instructions for running and testing it yourself, is available here:

https://gitlab.uni-koblenz.de/robbie/homer_home_net
