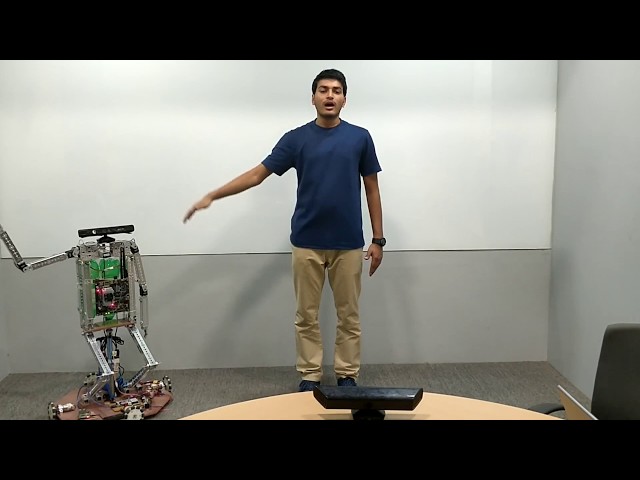

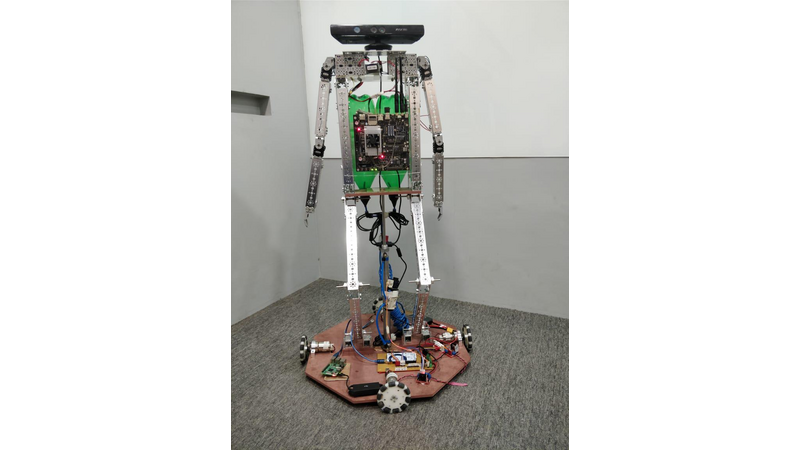

We propose Human-in-the-Loop feedback to increase the problem-solving capability of our system while maintaining its performance during intermittent communication. We aim to develop a robot capable of replicating human actions in real time, so that it achieves the same effect a human would if sent on the mission. Using imitation as a substitute for conventional coding and control of the robot will yield accurate, human-like motor skills that are often required in critical situations. This real-time imitation process can also be extended into a learning model, giving the robot a better decision-making process in case of communication failure.

Subsystems Overview:

Mechanical: The robot is primarily a humanoid mounted on an omni-drive base. The key advantage of an omni-drive system is that translational and rotational motion are decoupled, which simplifies navigation and allows the robot to move around swiftly. The structure has two manipulators (arms) that mimic a remote human operator. Each manipulator is made of aluminium links housing the servo motors that move it in space. Each arm has three degrees of freedom (shoulder adduction, shoulder flexion, elbow flexion), and a pneumatic system elevates the robot's manipulators.
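
To illustrate the decoupling claim, here is a minimal inverse-kinematics sketch for an omni-wheel base. It assumes a hypothetical three-wheel layout with wheels mounted at 0°, 120° and 240° around the centre; the wheel count, the 0.20 m base radius and the 0.05 m wheel radius are illustrative values, not the robot's actual dimensions. Each wheel's speed is a sum of a translation term and a rotation term, so the body velocity (vx, vy) and the yaw rate omega can be commanded independently.

```python
import math
from typing import List, Sequence

# Hypothetical geometry: the source does not state the wheel count or dimensions.
WHEEL_ANGLES_DEG = (0.0, 120.0, 240.0)  # wheel positions around the base centre
BASE_RADIUS_M = 0.20   # centre-to-wheel distance (assumed)
WHEEL_RADIUS_M = 0.05  # omni-wheel radius (assumed)


def omni_wheel_speeds(vx: float, vy: float, omega: float,
                      angles_deg: Sequence[float] = WHEEL_ANGLES_DEG,
                      base_radius: float = BASE_RADIUS_M,
                      wheel_radius: float = WHEEL_RADIUS_M) -> List[float]:
    """Inverse kinematics for an omni-wheel base.

    vx, vy: desired body-frame velocity (m/s); omega: yaw rate (rad/s).
    Returns each wheel's angular speed (rad/s). A wheel mounted at angle a
    drives along the tangent direction (-sin a, cos a), so its rim speed is
    the projection of (vx, vy) onto that direction plus base_radius * omega.
    Translation and rotation therefore enter as separate, additive terms.
    """
    speeds = []
    for a_deg in angles_deg:
        a = math.radians(a_deg)
        rim_speed = -math.sin(a) * vx + math.cos(a) * vy + base_radius * omega
        speeds.append(rim_speed / wheel_radius)
    return speeds
```

For example, `omni_wheel_speeds(0.0, 0.0, 1.0)` spins every wheel at the same 4.0 rad/s (pure rotation), while a pure translation such as `omni_wheel_speeds(0.5, 0.0, 0.0)` yields wheel speeds that sum to zero up to floating-point rounding, so no net rotation is introduced.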

Electronics and Sensing: An operator performs an action in the control station, which is sensed by a Microsoft Kinect depth-sensing camera. The operator's movement is tracked and sent to the robot for replication, so the robot's manipulators are driven entirely by the operator's actions. Such a Human-in-the-Loop system enables the robot to carry out complex actions at the disaster site, with the operator controlling the robot based on the visual feedback it sends back. A second Kinect camera is mounted on board to perceive the environment in 3-D, which facilitates generation of the site map. The robot carries two on-board computers: an embedded controller (ATmega2560) and an NVIDIA Jetson. The embedded controller controls the motion of the robot's base, while the Jetson is the brain for the rest of the computation. The Jetson receives manipulation data from the remote centre, generates the 3-D map from the on-board Kinect feed, and relays the map and camera feed to the command centre. The live camera feed helps the remote operator visualize and understand the robot's environment, allowing them to control the robot's actions swiftly.
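
The tracking step can be made concrete with a short sketch. It assumes the Kinect skeleton tracker already provides 3-D positions for the operator's shoulder, elbow and wrist (reading them from the Kinect SDK is outside this sketch). The elbow flexion angle then follows from the angle between the upper-arm and forearm vectors. The helper names and the 0 to 150° servo range are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    """Vector from b to a."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _angle_between(u: Vec3, v: Vec3) -> float:
    """Angle between two 3-D vectors, in degrees."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("joint positions must be distinct")
    # Clamp against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def elbow_flexion_deg(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> float:
    """Elbow flexion: 0 deg with the arm straight, increasing as it bends."""
    upper_arm = _sub(shoulder, elbow)
    forearm = _sub(wrist, elbow)
    return 180.0 - _angle_between(upper_arm, forearm)


def to_servo_command(angle_deg: float, lo: float = 0.0, hi: float = 150.0) -> float:
    """Clamp a joint angle to the (assumed) travel range of the robot's servo."""
    return max(lo, min(hi, angle_deg))
```

A straight arm gives 0°, and an arm bent so that the forearm is perpendicular to the upper arm gives 90°; clamping before transmission keeps the command within the servo's travel even when the tracker reports an implausible pose.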

Software: The various entities involved in the project exchange data through ROS (Robot Operating System). ROS is based on a publish-subscribe architecture: nodes publish messages on named topics and subscribe to the topics they need. The master provides registration APIs that let each node register as a publisher, a subscriber, or both, and it informs subscribers which nodes publish their topics; the message data itself then flows directly between nodes, by default over standard TCP/IP sockets. ROS therefore lets us run the various subsystems as separate nodes that are independent of each other. Compared with conventional monolithic robot code, this isolates faults: if one subsystem fails, only its node is affected and the operations running on the other nodes continue without interruption. This property is of utmost importance in critical tasks such as defence operations.
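
The fault-isolation argument can be illustrated with a toy, in-process model of the publish-subscribe pattern. This is not the ROS API: `ToyMaster`, `ToyPublisher` and the topic name are hypothetical. As in ROS, the master here only records registrations, and the publisher delivers messages directly to the subscribers it looks up. Real ROS nodes are isolated because they run as separate processes; this sketch only approximates that by catching each subscriber's exception so the remaining subscribers still receive the message.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Callback = Callable[[Any], None]


class ToyMaster:
    """Hypothetical stand-in for the ROS master: it only records which
    callbacks subscribe to which topic and never carries message data."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def register_subscriber(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def lookup(self, topic: str) -> List[Callback]:
        return list(self._subscribers[topic])


class ToyPublisher:
    """Publishes on one topic by delivering directly to registered subscribers."""

    def __init__(self, master: ToyMaster, topic: str) -> None:
        self._master = master
        self._topic = topic

    def publish(self, msg: Any) -> List[str]:
        """Deliver msg to every subscriber and return descriptions of failures.

        A failing subscriber is recorded and skipped, so the others still
        receive the message (a rough analogue of ROS node isolation).
        """
        errors: List[str] = []
        for callback in self._master.lookup(self._topic):
            try:
                callback(msg)
            except Exception as exc:  # isolate each subscriber's failure
                name = getattr(callback, "__name__", "subscriber")
                errors.append(f"{name}: {exc}")
        return errors
```

For instance, if a hypothetical `telemetry_logger` callback raises while an `arm_controller` callback is registered on the same `/arm_joint_angles` topic, `publish` still delivers the message to `arm_controller` and returns the logger's error for reporting.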

