Recent breakthroughs in deep learning have given many robots a degree of autonomy. However, several limitations remain in implementing deep learning-based robotic intelligence. First, a small, agile robot cannot run visual perception that demands large computing power, because its onboard computation is limited. Moreover, although reinforcement learning has shown surprising performance on some motion planning problems, application-specific training methods are difficult to reuse on other robotic platforms.

Inspired by the current trend of using deep learning on robotic platforms, we devised a novel methodology for integrating state-of-the-art deep learning into a high-speed robotic system with a simple implementation.

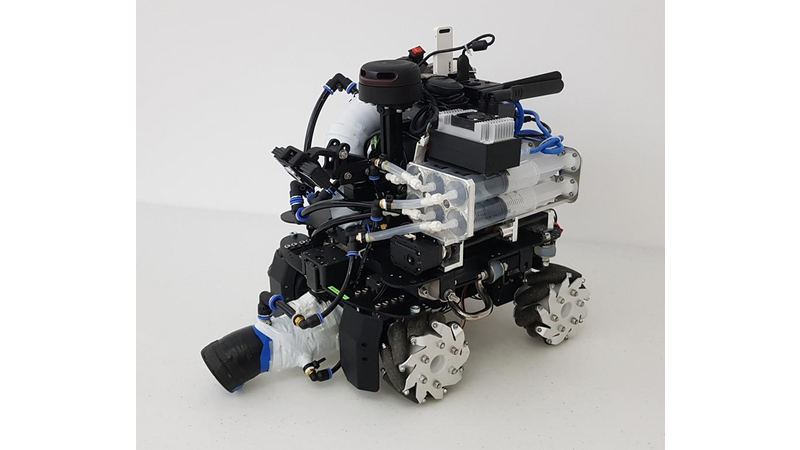

The proposed methodology is implemented and evaluated on a robot we developed, TT-bot. TT-bot is a general-purpose mobile robotic platform that can be used for indoor object collection. The robot must recognize targets precisely and plan efficient paths toward them. We applied deep learning to both perception and motion planning to improve the robot's performance.

Perception

Unlike in many other applications, limited computing power remains a major obstacle to using deep learning in a mobile robot's recognition system. Because such networks have high-end hardware requirements, embedding one directly in the robot would severely reduce the throughput of its recognition system.

To integrate a large deep neural network into a mobile robot that requires high control bandwidth, we designed a new robot software framework, the Asynchronous Deep Classification Network (ADCN). The key components of ADCN are the fast visual perception, the deep classification network, and the multi-body tracker. The fast visual perception first estimates the coarse positions of target objects and registers them with the multi-body tracker. While the robot tracks and moves toward the targets, it runs the deep neural network asynchronously to classify them precisely. Data flow among these three components is handled by one more component, an abstraction map, which serves as a data hub for the components and for additional sensors. By running ADCN on an Nvidia Jetson TX2 mobile GPU, we developed a fully standalone mobile robotic platform.
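The asynchronous pattern behind ADCN can be illustrated with a minimal Python sketch, assuming a producer/consumer design: a fast loop registers coarse detections with a tracker immediately, while a background thread runs the slow classifier and fills in labels later. The names `MultiBodyTracker`, `Track`, `slow_classifier`, and `classification_worker` are hypothetical stand-ins, and the classifier is a placeholder rule rather than a neural network; this is not the authors' implementation.

```python
import queue
import threading
import time
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Track:
    """A target registered by the fast perception stage (hypothetical structure)."""
    track_id: int
    position: Tuple[float, float]   # coarse (x, y) estimate from fast perception
    label: Optional[str] = None     # filled in later by the slow classifier


class MultiBodyTracker:
    """Thread-safe store of tracked targets, standing in for the multi-body tracker."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._tracks: Dict[int, Track] = {}
        self._next_id = 0

    def register(self, position: Tuple[float, float]) -> int:
        with self._lock:
            track_id = self._next_id
            self._next_id += 1
            self._tracks[track_id] = Track(track_id, position)
            return track_id

    def set_label(self, track_id: int, label: str) -> None:
        with self._lock:
            self._tracks[track_id].label = label

    def snapshot(self) -> Dict[int, Track]:
        with self._lock:
            return {k: Track(t.track_id, t.position, t.label) for k, t in self._tracks.items()}


def slow_classifier(position: Tuple[float, float]) -> str:
    """Placeholder for the deep classification network (hypothetical decision rule)."""
    time.sleep(0.05)  # simulate inference latency on the GPU
    return "target" if position[0] >= 0 else "non-target"


def classification_worker(tracker: MultiBodyTracker, requests: "queue.Queue") -> None:
    """Labels tracks in the background so the control loop never waits on inference."""
    while True:
        item = requests.get()
        if item is None:  # sentinel value: stop the worker
            break
        track_id, position = item
        tracker.set_label(track_id, slow_classifier(position))


tracker = MultiBodyTracker()
requests: "queue.Queue" = queue.Queue()
worker = threading.Thread(target=classification_worker, args=(tracker, requests))
worker.start()

# Fast loop: coarse detections are registered at once and queued for classification.
for pos in [(1.0, 2.0), (-0.5, 1.0), (2.0, -1.0)]:
    tid = tracker.register(pos)
    requests.put((tid, pos))
    # ...tracking and motion control would continue here without blocking...

requests.put(None)
worker.join()
labels = {tid: t.label for tid, t in tracker.snapshot().items()}
print(labels)  # {0: 'target', 1: 'non-target', 2: 'target'}
```

The design choice illustrated here is that the tracker, not the classifier, owns the target list, so control can act on coarse positions as soon as they exist and simply pick up labels whenever inference finishes.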

Motion Planning

To reduce training complexity and improve the algorithm's extensibility, we propose the Gaming Reinforcement Learning-Based Motion Planner (GRL-planner) for mobile robots. The key idea is to feed the reinforcement learning-based motion planner the formatted abstraction map as its training data source, which improves the system's compatibility. The reinforcement learning network outputs one of ten actions (translation in eight directions relative to the body, plus left and right turns) to control the holonomic mobile robot, TT-bot. For reinforcement learning we use the asynchronous advantage actor-critic (A3C) algorithm, which has shown cutting-edge performance in video game AI.
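As an illustration of the ten-action output, the following minimal Python sketch maps each discrete action index to a body-frame velocity command and samples an action from policy logits, as an actor-critic policy head such as A3C's would. It assumes that actions 0 to 7 are eight headings spaced 45 degrees apart and that 8 and 9 are left and right turns; this ordering, the speeds `LINEAR_SPEED` and `ANGULAR_SPEED`, and the helper names are hypothetical, not taken from TT-bot.

```python
import math
import random
from typing import List, Tuple

LINEAR_SPEED = 0.5   # m/s, hypothetical
ANGULAR_SPEED = 1.0  # rad/s, hypothetical


def action_to_twist(action: int) -> Tuple[float, float, float]:
    """Map a discrete action index (0-9) to a body-frame command (vx, vy, omega).

    Actions 0-7 translate in one of eight directions spaced 45 degrees apart,
    starting straight ahead (+x) and going counter-clockwise. Action 8 turns
    left (counter-clockwise) and action 9 turns right.
    """
    if 0 <= action < 8:
        heading = action * math.pi / 4
        return (LINEAR_SPEED * math.cos(heading), LINEAR_SPEED * math.sin(heading), 0.0)
    if action == 8:
        return (0.0, 0.0, ANGULAR_SPEED)
    if action == 9:
        return (0.0, 0.0, -ANGULAR_SPEED)
    raise ValueError(f"action must be in 0..9, got {action}")


def select_action(logits: List[float], rng: random.Random) -> int:
    """Sample an action from the policy head's logits via a numerically stable softmax."""
    peak = max(logits)
    weights = [math.exp(x - peak) for x in logits]
    total = sum(weights)
    probs = [w / total for w in weights]
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


rng = random.Random(0)
policy_logits = [0.1, 0.3, 2.0, 0.0, -1.0, 0.2, 0.0, 0.5, -0.3, 0.4]  # hypothetical network output
action = select_action(policy_logits, rng)
print(action, action_to_twist(action))
```

Because the platform is holonomic, sideways and diagonal translations are valid commands on their own, which is what makes a small discrete action set like this sufficient.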

Because the proposed abstraction map serves as a general data format that integrates various types of sensor information, this approach gives the planner a common basis regardless of the combination of sensors. It also simplifies development of the simulation environment: since the 2-D map is highly abstract and consists of discrete data, building a simulator for it is much less complex than building a 3-D environment simulator.
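To show why a discrete 2-D map makes simulation cheap, here is a minimal toy simulator in Python, assuming the abstraction map is a small grid with three binary channels (robot, targets, obstacles) and that the ten actions become one-cell moves or 45-degree heading changes. The channel layout, the `GridSim` class, the `MOVES` table, and the reward values are all hypothetical choices for illustration, not the GRL-planner's actual environment.

```python
from typing import Iterable, List, Tuple

Cell = Tuple[int, int]  # (row, col); rows grow downward

# Grid steps for the eight headings, 45 degrees apart, counter-clockwise from +x.
MOVES: List[Cell] = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


class GridSim:
    """Toy abstraction-map environment (hypothetical, for illustration only)."""

    def __init__(self, size: int, robot: Cell, targets: Iterable[Cell], obstacles: Iterable[Cell]):
        self.size = size
        self.robot = robot
        self.heading = 0  # in units of 45 degrees, counter-clockwise
        self.targets = set(targets)
        self.obstacles = set(obstacles)

    def layers(self) -> List[List[List[int]]]:
        """Return the map as three binary channels: robot, targets, obstacles."""
        grids = [[[0] * self.size for _ in range(self.size)] for _ in range(3)]
        grids[0][self.robot[0]][self.robot[1]] = 1
        for r, c in self.targets:
            grids[1][r][c] = 1
        for r, c in self.obstacles:
            grids[2][r][c] = 1
        return grids

    def step(self, action: int) -> Tuple[float, bool]:
        """Apply one of the ten actions and return (reward, done)."""
        if action == 8:  # turn left
            self.heading = (self.heading + 1) % 8
            return -0.01, False
        if action == 9:  # turn right
            self.heading = (self.heading - 1) % 8
            return -0.01, False
        dr, dc = MOVES[(action + self.heading) % 8]  # body-frame move rotated by heading
        r, c = self.robot[0] + dr, self.robot[1] + dc
        if not (0 <= r < self.size and 0 <= c < self.size) or (r, c) in self.obstacles:
            return -0.1, False  # blocked: stay in place with a small penalty
        self.robot = (r, c)
        if self.robot in self.targets:
            self.targets.remove(self.robot)
            return 1.0, not self.targets  # collected a target
        return -0.01, False


sim = GridSim(5, robot=(2, 2), targets=[(2, 3)], obstacles=[(1, 2)])
print(sim.step(0))  # move straight ahead onto the target
```

The whole environment is a few set lookups per step, which is the practical advantage over a 3-D simulator: an A3C-style trainer can run many such instances in parallel at negligible cost.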

Mission and Robot Design

To evaluate the proposed methodology, we designed a general-purpose mission for a mobile robot: an indoor multi-target tracking and collecting task. To accomplish it, the robot must search, explore, navigate, and interact with its environment. Specifically, the robot tracks and collects small objects of specific shapes scattered across an indoor floor. For this mission we built TT-bot, a standalone indoor multi-target tracking and collecting robot with two main mechanical features: a holonomic platform and a variable-diameter nozzle.

The robot was designed to represent the general functions of mobile robots (recognition, navigation, and interaction) in order to demonstrate the proposed system architecture. Accordingly, the chassis is equipped with four mecanum wheels that provide holonomic movement. To secure stable vision, the platform and the chassis are mechanically isolated by silicone dampers, which keeps the video stream very stable. This structure absorbs wobbling and protects the cameras and processors from damage caused by physical impulses during rapid acceleration.
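Holonomic motion on four mecanum wheels comes from the standard inverse kinematics, which converts a body velocity (vx forward, vy left, omega counter-clockwise) into four wheel speeds. The Python sketch below assumes the common X-roller layout; sign conventions differ with how the wheels are mounted, and the wheel radius and half-length/half-width values in the usage line are hypothetical, not TT-bot's dimensions.

```python
from typing import Tuple


def mecanum_inverse_kinematics(
    vx: float, vy: float, omega: float,
    wheel_radius: float, half_length: float, half_width: float,
) -> Tuple[float, float, float, float]:
    """Return wheel angular speeds (rad/s) as (front-left, front-right, rear-left, rear-right).

    Assumes the common X-roller mecanum layout; vx is forward, vy is to the
    left, omega is counter-clockwise, all in the robot's body frame.
    """
    k = half_length + half_width  # lever arm for rotation
    return (
        (vx - vy - k * omega) / wheel_radius,  # front-left
        (vx + vy + k * omega) / wheel_radius,  # front-right
        (vx + vy - k * omega) / wheel_radius,  # rear-left
        (vx - vy + k * omega) / wheel_radius,  # rear-right
    )


# Pure sideways motion to the left: diagonal wheel pairs spin in opposite directions.
print(mecanum_inverse_kinematics(0.0, 0.3, 0.0, wheel_radius=0.05, half_length=0.2, half_width=0.15))
```

With these hypothetical dimensions, driving straight ahead at 0.5 m/s gives every wheel 0.5 / 0.05 = 10 rad/s, while strafing left at 0.3 m/s gives -6, 6, 6, and -6 rad/s; this ability to translate in any direction without turning first is what the ten-action planner relies on.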

As the end-effector, a suction motor is used to demonstrate interaction between the robot and its targets. The inner radius of the nozzle is adjusted according to the detected target's size and weight to optimize collection performance. The variable-diameter nozzle relies on the increase in flow speed that accompanies a decrease in diameter: an air pouch for volume control is formed between the inner surface of the nozzle and a coaxial cylindrical latex membrane lining that surface. While the suction motor runs at steady power, the robot varies the air flow pressure and speed by changing the nozzle's cross-sectional area, allowing it to collect objects of various sizes and weights.
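The speed-versus-diameter relationship can be made concrete with the continuity equation Q = A v. The Python sketch below is an idealization: it assumes incompressible air and a fixed volumetric flow rate Q through the nozzle, whereas a real suction motor's flow also depends on the pressure drop across the system. The flow rate and radii in the usage line are hypothetical values, not measurements from TT-bot.

```python
import math
from typing import Tuple

AIR_DENSITY = 1.2  # kg/m^3, approximately air at room temperature and sea level


def nozzle_flow(volumetric_flow: float, inner_radius: float) -> Tuple[float, float]:
    """Return (mean air speed in m/s, dynamic pressure in Pa) at the nozzle opening.

    Idealized model: incompressible air and fixed volumetric flow, so the
    continuity equation Q = A * v gives v = Q / (pi * r^2), and the dynamic
    pressure is 0.5 * rho * v^2.
    """
    area = math.pi * inner_radius ** 2
    speed = volumetric_flow / area
    dynamic_pressure = 0.5 * AIR_DENSITY * speed ** 2
    return speed, dynamic_pressure


# Hypothetical flow of 0.01 m^3/s through a 20 mm and a 10 mm inner radius.
for radius in (0.02, 0.01):
    v, q = nozzle_flow(0.01, radius)
    print(f"r = {radius * 1000:.0f} mm: {v:.1f} m/s, {q:.1f} Pa")
```

Under these assumptions, halving the radius quarters the area, so the air speed rises fourfold and the dynamic pressure sixteenfold, which is why inflating the pouch lets the same motor lift heavier objects.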
